Getting Started: AI Risk Analysis

Overview

Manifest’s AI Risk module offers organizations three core experiences designed to give visibility into their end-to-end AI supply chain risk.

- Analyze open source and open-weight models on Hugging Face directly in Manifest.

- Learn what information Manifest provides on AI models, and what it means for your organization.

- Learn how we improve your AI supply chain security.

- Set org policies around AI model countries of origin, datasets, software dependencies, and other risk signals.

- Enforce AI governance directly during model training and tuning, with built-in policy checks and effortless model card generation.

- Detect and manage hidden AI models in your codebase for full transparency and compliance.

Get started with AI Model Risk Analysis

Manifest helps you save time and surface more findings when analyzing the risk of open-weight AI models. Let's get you started with an analysis so you can see what Manifest can do.

For models that have been modified or fine-tuned, see AI Model Development Analysis.

For this initial analysis, we will use the Hugging Face URL of a model that might be used in a piece of software.

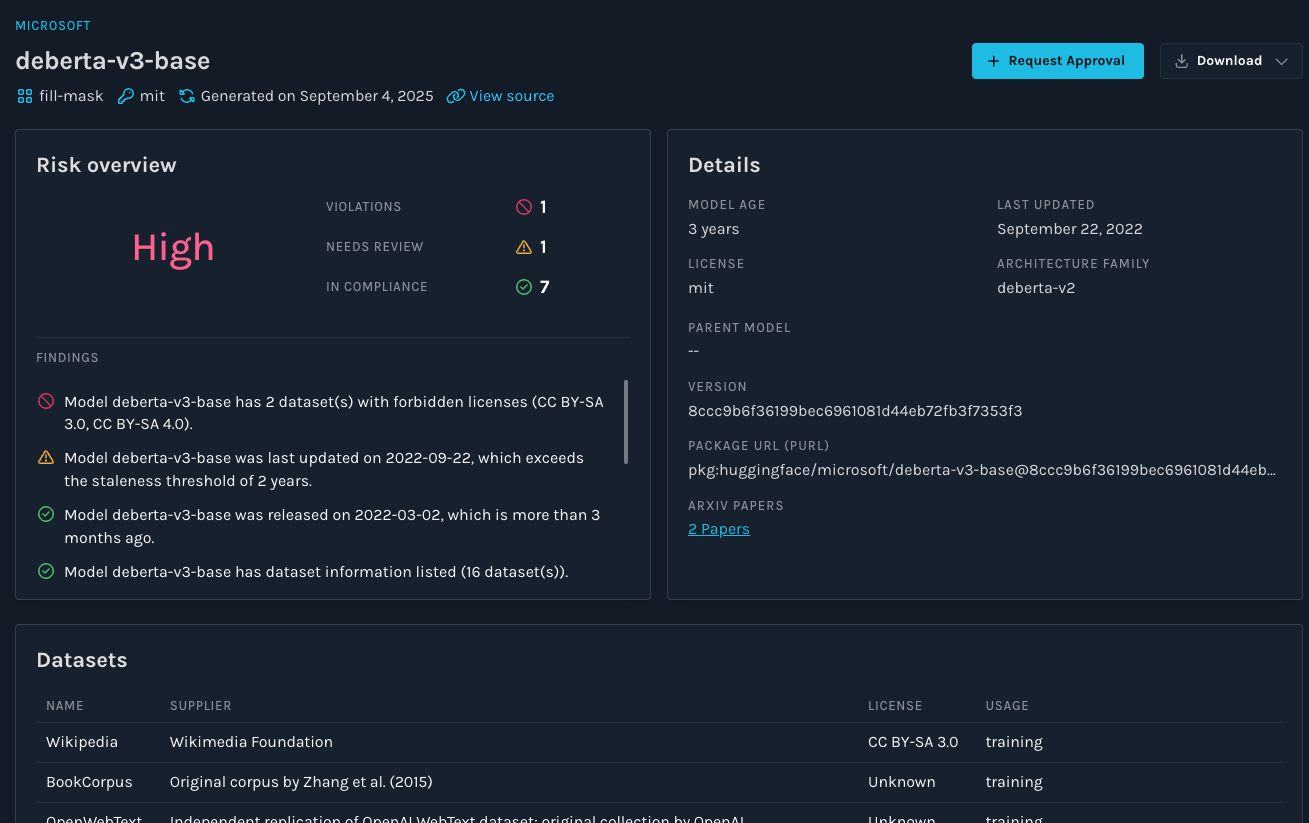

DeBERTaV3: https://huggingface.co/microsoft/deberta-v3-base

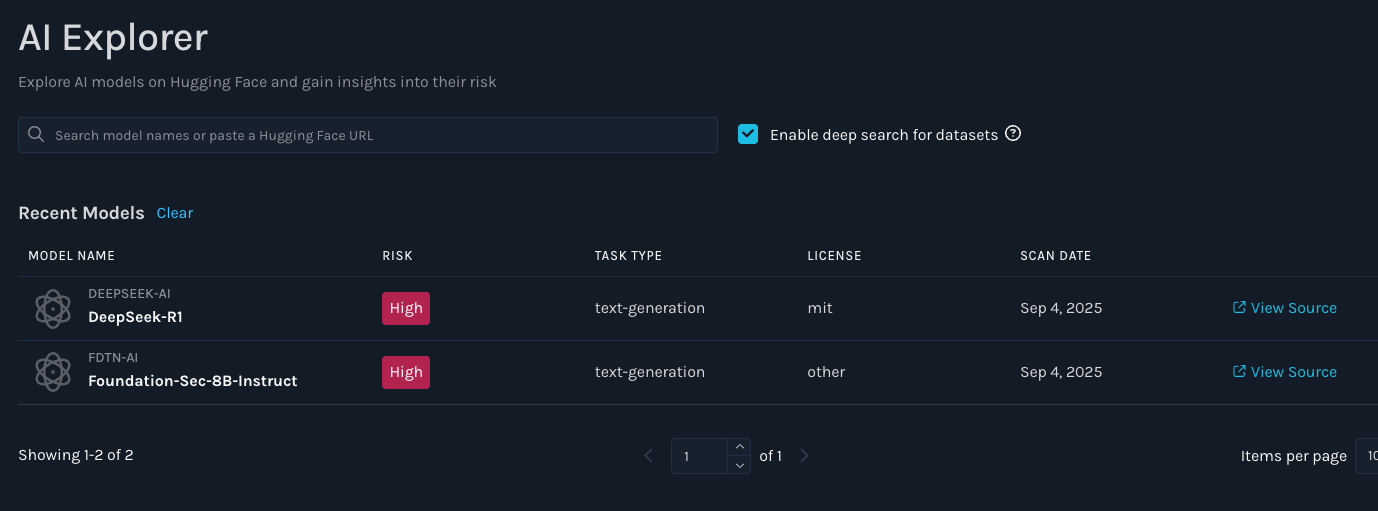

You will notice that this page has no information about the datasets used to train this model. We can find that information quickly: go to app.manifestcyber.com and open the AI Explorer.

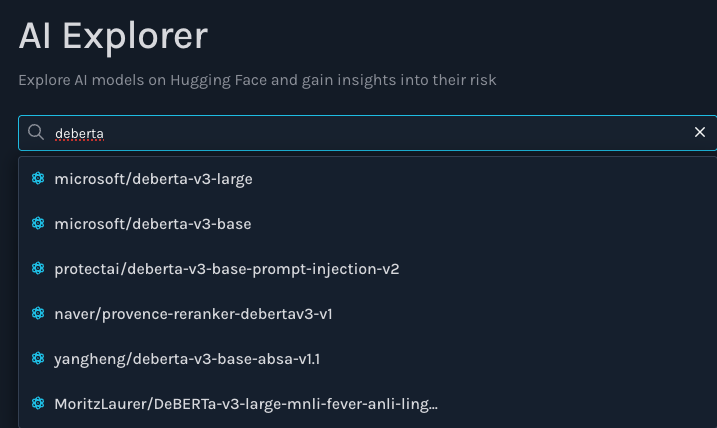

On the AI Explorer page, enter the model name or its Hugging Face URL into the search box to find the model.

When you search by model name, Manifest suggests models that match that name. Click the most relevant match, such as "microsoft/deberta-v3-base", to start the analysis.
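Because the search box accepts either a model name or a Hugging Face URL, it helps to see how the two relate: a Hugging Face model URL is the repository ID (`owner/name`) prefixed with `https://huggingface.co/`. The sketch below is a hypothetical helper, not part of Manifest, that extracts the repository ID from a URL. It assumes model URLs of the form `https://huggingface.co/<owner>/<name>` (optionally followed by paths such as `/tree/main`) and does not handle dataset or Space URLs.

```python
from urllib.parse import urlparse


def hf_repo_id(value: str) -> str:
    """Return the 'owner/name' repo ID from a Hugging Face model URL or ID.

    Hypothetical helper for illustration only; assumes model URLs of the form
    https://huggingface.co/<owner>/<name>[/...].
    """
    value = value.strip()
    if "://" not in value:
        # Already a repo ID such as "microsoft/deberta-v3-base"
        return value.strip("/")
    parsed = urlparse(value)
    if parsed.netloc not in ("huggingface.co", "www.huggingface.co"):
        raise ValueError(f"not a Hugging Face URL: {value}")
    parts = [p for p in parsed.path.split("/") if p]
    if len(parts) < 2:
        raise ValueError(f"URL has no owner/name path: {value}")
    # Keep only the owner and model name; drop suffixes like /tree/main
    return f"{parts[0]}/{parts[1]}"


print(hf_repo_id("https://huggingface.co/microsoft/deberta-v3-base"))
```

Running the example prints `microsoft/deberta-v3-base`, the same name you would pick from the search suggestions.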

Once the analysis is complete, review the results. Some of the training datasets carry licenses that our risk policy forbids by default. The results also list many datasets that are not easy to discover from the model's Hugging Face page. Finally, the model is somewhat outdated, so we would likely want to look for a more recent model.
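To make the idea of a risk policy more concrete, here is a minimal sketch of how a license and country-of-origin check might work. It is an illustration under stated assumptions, not Manifest's policy engine: the `RiskPolicy` and `ModelFindings` structures, their field names, the ISO country codes, and the example forbidden license are all hypothetical, and the model and datasets in the usage example are placeholders rather than real analysis results.

```python
from dataclasses import dataclass, field


@dataclass
class RiskPolicy:
    # Hypothetical policy fields; Manifest's real policy options may differ
    forbidden_licenses: set[str] = field(default_factory=set)
    restricted_countries: set[str] = field(default_factory=set)  # assumed ISO codes


@dataclass
class ModelFindings:
    # Hypothetical shape of analysis results for one model
    name: str
    country_of_origin: str
    dataset_licenses: dict[str, str]  # dataset name -> license identifier


def evaluate(policy: RiskPolicy, model: ModelFindings) -> list[str]:
    """Return human-readable policy violations (empty list if none)."""
    violations = []
    if model.country_of_origin in policy.restricted_countries:
        violations.append(f"{model.name}: origin {model.country_of_origin} is restricted")
    # Sort datasets so the violation order is deterministic
    for dataset, license_id in sorted(model.dataset_licenses.items()):
        if license_id in policy.forbidden_licenses:
            violations.append(
                f"{model.name}: dataset {dataset} uses forbidden license {license_id}"
            )
    return violations


# Placeholder policy and findings, for illustration only
policy = RiskPolicy(forbidden_licenses={"cc-by-nc-4.0"}, restricted_countries={"CN"})
model = ModelFindings(
    "example-org/example-model",
    "US",
    {"dataset-a": "cc-by-nc-4.0", "dataset-b": "apache-2.0"},
)
for violation in evaluate(policy, model):
    print(violation)
```

Returning a list of violations, rather than a single pass/fail flag, mirrors how the analysis results surface each problematic dataset individually.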

Additional Models:

Here are some additional models you might want to analyze:

- Falcon 7B (shows software dependencies introduced by the AI model)

- Foundation Sec 8B Instruct (the parent of its parent model is Llama 3.1)

- DeepSeek R1 (originates from a restricted country; set your risk policies to restrict China first)

What's Next:

- Set your AI Risk Policies

  - Set org policies around AI model countries of origin, datasets, software dependencies, and other risk signals.

- Get immediate risk feedback with our Python plugin

  - This gives developers and data scientists direct feedback during development.

- Start detecting Shadow AI in your source code

  - Detect hidden AI models in your source code through the CLI.

- Build out your AI Model Inventory

  - Track which models you have approved.
